By Gus Rossi, Director, Responsible Technology

With 22 million teens logging onto Instagram daily, child and online safety is at the forefront of state and federal legislatures’ agendas and gaining momentum. The need to rein in social media companies and address the online harms young people face on these platforms is of immediate concern.

Meta was recently sued by more than three dozen states for knowingly using features on Instagram and Facebook to hook children, especially preteens, to its platforms. The company claims its social media sites are safe for young people and touts the introduction of tools that support teens and families. However, the recent “Evaluation of Instagram’s Processes for Risks to Minors,” conducted by the global technology and democracy initiative Reset.Tech, found that Instagram under-moderates both pro-restrictive eating disorder content and pro-suicide and/or pro-self-harm materials, and documented several dark patterns in its sign-on experience.

Just a couple of weeks ago, the U.S. District Court for the Northern District of California granted a preliminary injunction against the state’s Age-Appropriate Design Code Act (AADC). In siding with the technology industry trade group NetChoice, which sought the injunction, Judge Beth Labson Freeman wrote that the act likely does “not pass constitutional muster.”

There is a growing public reckoning that digital platforms should not be able to harm children, or any of us for that matter, with impunity. According to polling from the Council for Responsible Social Media, 78% of Americans hold social media platforms responsible for a wide range of harms to child well-being, mental health, and the strength of our democracy. An overwhelming majority of Americans favor legislation that would require social media platforms to be more transparent, and a majority of Democrats and Republicans alike want elected officials to take action.

The AADC requires online platforms to proactively consider how their product design impacts the privacy and safety of children and teens in California. It was an important paradigm shift and pushed back against the standard, harmful model that incentivizes the use of children’s data for profit. The bill passed unanimously in the California state legislature and was signed into law by Governor Gavin Newsom in August 2022.

The bill requires platforms to:

- Stop collecting unnecessary personal data about kids

- Stop tricking kids with dark patterns, or deceptive design features that manipulate and impede choice

- Stop tracking kids’ location

- Set default privacy settings for kids

- Make it easy for kids to report privacy concerns

- Let kids know when they’re being monitored or tracked

It’s a vital piece of legislation that should be replicated in every state across the U.S. Unfortunately, the AADC has come under fire in the wake of the preliminary injunction. The AADC, though, is not a content bill. It is a design bill that seeks to tackle harmful platform design. As we say, freedom of speech is not freedom of reach. This legislation is greatly needed to stem the tide of invasive practices that are rampant on the internet today and that violate children’s privacy.

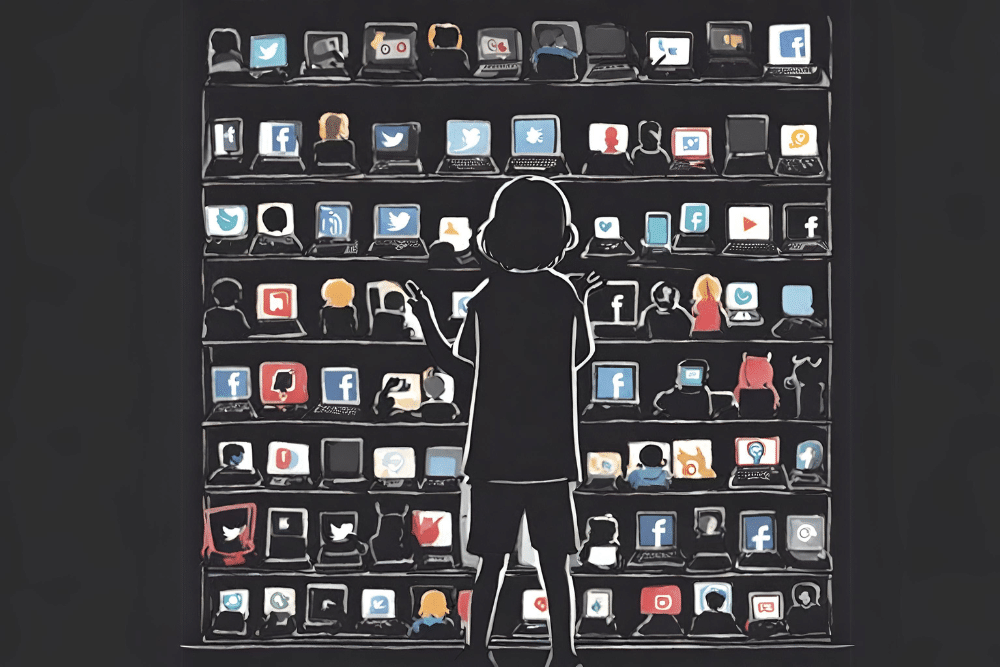

The threats to children are clear in the addictive design of these platforms. Content is set to autoplay, for example, so a child doesn’t even have to choose to press play; the platform feeds the content to them proactively. Deceptive and unfair user interface designs also include the infinite scroll, whereby the user sees a never-ending stream of posts. This keeps kids hooked for longer than they intended and provides a greater opportunity to show them advertisements and collect their data, bringing the service more revenue.

Dark patterns also prey on children. For example, the X on an advertisement may be so small that a kid accidentally clicks on the ad and is redirected to the ad’s website. Or the X in the top corner is too small to see, so the kid can’t get out of the ad. We see these as adult users too, and some of us, though not all, have the savvy to circumvent them. Children are clearly the easiest target for these deceptive practices.

We know well that free speech is protected by law. The harmful product design that tracks children and hooks kids to social platforms, however, should not be. Using the First Amendment to push back against any and all technology policy that seeks to protect young people, and all of us more generally, is a weaponization of the United States’ commitment to free speech.

Omidyar Network, in particular, has its roots in the heart of innovation, but innovation doesn’t happen in a vacuum or without a catalyst. We see tremendous potential for innovation within the bounds of thoughtful regulation that balances rights and freedoms. The AADC is a strong example of that kind of catalyst. We urge the Ninth Circuit to consider the appeal of California Attorney General Rob Bonta.